I’ve stopped using ChatGPT.

Well, not entirely… but I haven’t touched it in several weeks, ever since I made a personal pledge a month or so ago to use it only in absolute emergencies, when search fails me. And I hesitate even then. (The last time I used it was for a SQL query that confounded me. It answered perfectly, and I now understand how to solve that particular relational-database problem.) My Mastodon feed is full of LLM haters who arrived at their position primarily because they think LLMs produce too much garbage. Of those haters, 99% simply misunderstand or misrepresent the technology’s capabilities and limitations (likely because our society is the Wild West of over-promised new technology), and they have taken to quoting the most absurdly iconoclastic views. In a word: insincere. There is a reasonable way to approach reasonably new tech.

My personal ban is based on environmental impact. LLM technologies and services are energy-hungry in two ways: first in training their models, and second in serving the queries, simple or complex, that users send them. From what we currently know, their energy consumption appears to be offensively demanding.

For a long time I have used “technologist” as an epithet for technology-informed people, and for anyone who is both geek-adept and a connector to those who are not. It sounds a bit (or more than a bit) arrogant, but I don’t use it with that intention, and the word “geek” has become too cutesy. Technologists are responsible for understanding the uses and implications of new technologies, which have come primarily from computing (but also from cosmology and astrophysics, energy production, cellphone and broadcast technology, etc.), and for communicating them responsibly. They’re mavens. As such, they have a responsibility to understand, to a certain degree, the New New Things introduced into polite society, and to communicate their intent intelligently to those outside the tech community. Pop-science reporting is so often garbage; pop-tech reporting is much, much worse.

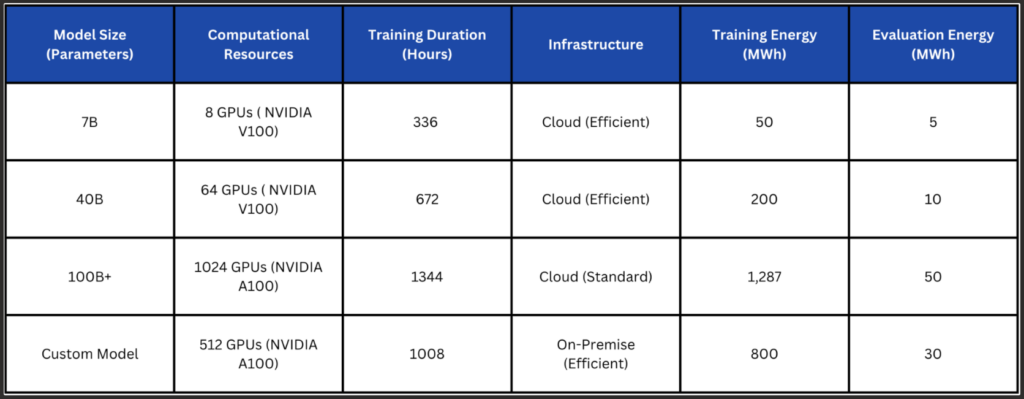

From the article “How Much Energy Do LLMs Consume? Unveiling the Power Behind AI” at the Association of Data Scientists:

[T]raining GPT-3, which has 175 billion parameters, consumed an estimated 1,287 MWh (megawatt-hours) of electricity, which is roughly equivalent to the energy consumption of an average American household over 120 years.
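As a rough sanity check of that comparison, the arithmetic can be sketched in a few lines of Python. The household figure is an assumption on my part (about 10,700 kWh per year, in the neighborhood of recent U.S. averages), not a number from the quoted article; only the 1,287 MWh estimate comes from the source.

```python
# Rough check of the "120 years of household electricity" comparison.
# ASSUMPTION: an average U.S. household uses about 10,700 kWh per year.
# That figure is illustrative and NOT taken from the quoted article.

GPT3_TRAINING_MWH = 1_287          # training estimate quoted in the article
KWH_PER_MWH = 1_000                # unit conversion
HOUSEHOLD_KWH_PER_YEAR = 10_700    # assumed annual household consumption


def household_years(training_mwh: float, household_kwh_per_year: float) -> float:
    """Return how many years one household would take to use training_mwh."""
    return training_mwh * KWH_PER_MWH / household_kwh_per_year


if __name__ == "__main__":
    years = household_years(GPT3_TRAINING_MWH, HOUSEHOLD_KWH_PER_YEAR)
    print(f"{years:.0f} household-years")  # prints "120 household-years"
```

Working backward, 1,287 MWh spread over 120 years implies about 10,725 kWh per household per year, so the quoted comparison holds up under that assumption.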

The hope that this will all be solved by efficiency gains, whether from better training algorithms or better hardware, is the same hope we’ve held for decades that the effects of climate change could be mitigated the same way. That didn’t happen, and such thinking can lead to larger systemic failures. From the article “Generative AI’s Energy Problem Today Is Foundational” at IEEE Spectrum:

We need to consider rebound effects, de Vries says, such as “increasing efficiency leading to more consumer demand, leading to an increase in total resource usage and the fact that AI efficiency gains may also lead to even bigger models requiring more computational power.”

Although the environmental impact should be the primary concern, most complaints about LLMs have fallen into three categories. First, and most commonly and publicly discussed, is that they steal copyrighted material. The involvement of Hollywood and celebrities in this legitimate concern put it squarely in the public consciousness. Copyright is such a fraught issue because media companies abuse it so much. Yes, theft is bad, but when you sell someone a digital version of something and then, years later, degrade it or take it away, it becomes obvious that theft goes both ways.

Second, and a little wonkier, is that the answers range from imprecise to dangerous. This concern receives a lot of weight because of popularly reported absurdities such as putting glue on pizza or eating rocks (it’s almost always food-related). These instances are likely a tiny fraction of what actually gets answered, but when it comes to safety those fractions matter. That said, the correct answers are so effortlessly correct, and at times so unobtainably correct (answers that would otherwise require you to learn an entirely new professional skill), that those fractions matter less. I mean, who is going to put glue on their pizza? And if they do… well, there are disclaimers for a reason.

To that point, technologists need to be morally responsible in their use of technology, and, ecologically, using this technology is irresponsible.

Finally, the article “No one’s ready for this” at The Verge raises a less understood but equally concerning social issue with text-to-image generators. Two years ago, DALL-E was everybody’s fun prog-metal album cover generator; since then, text-to-image generation has settled into an everyday distraction and a job killer for illustrators. Reviewing the new Reimagine tool on Google’s Pixel 9, the author discusses the implications of Johnny Appleseed-ing text-to-image generation into everyone’s camera phone. They note that it effectively zeroes out any hope of phones continuing to be a tool of social justice and accountability.

You can already see the shape of what’s to come. In the Kyle Rittenhouse trial, the defense claimed that Apple’s pinch-to-zoom manipulates photos, successfully persuading the judge to put the burden of proof on the prosecution to show that zoomed-in iPhone footage was not AI-manipulated.

No one knows how this will alter society, but, unfortunately, the choice of whether LLMs and text-to-image generators can be reined in is the same choice we face whenever any new technology is developed. There is none.

Updated 23 Sep 2024

THE DEPARTMENT OF ENERGY WANTS YOU TO KNOW YOUR CONSERVATION EFFORTS ARE MAKING A DIFFERENCE (McSweeney’s, 10 Sep 2024)

Sometimes the obvious can be pretty funny if it’s dark enough.

By handwashing your dishes instead of using your dishwasher, you made it possible for an elaborate, four-story digital billboard in Times Square to advertise a seven-dollar bottle of water for twelve seconds. The display runs 24-7, naturally, but you personally contributed twelve seconds of that. You are making a difference!

AN AI EVENT HIRED JOHN MULANEY TO DO A COMEDY SET AND HE BRUTALLY ROASTED THEM ONSTAGE (Futurism, 23 Sep 2024)

And angry.

“Some of the vaguest language ever devised has been used here in the last three days,” he said. “The fact that there are 45,000 ‘trailblazers’ here couldn’t devalue the title anymore.”

AI DATACENTERS MORE THAN 600 PERCENT WORSE FOR ENVIRONMENT THAN TECH COMPANIES CLAIMED

For good cause.

[The actual emissions from the in-house data centers of] AI leaders including Microsoft, Google, Meta, and Apple, are about 662 percent higher than what they’ve officially reported. [This] was limited to emissions made between 2020 and 2022, a window that captures the cusp of the AI boom but not the staggering heights that it’s reached now.

…[T]he biggest liar of the bunch appears to be Meta. In 2022, Meta’s official emissions from in-house data centers was just 273 metric tons of CO2. But using location-based data, that soars to 3.8 million metric tons — a 19,000 times increase, in case you were wondering.

I was also listening to the podcast Hard Fork, where the hosts discussed the changes in OpenAI’s new o1 model and how much more processing power OpenAI had to throw at it. “For every query they have to chop down another tree,” they laughed at one point, without a bit of shame.